Have you ever thought about how good it would be if hosting an application online were as simple as running it on your localhost:3001?

You’ve probably also wondered: “Why do we need this whole deployment process — and especially those complicated Kubernetes manifests — to get an app online?”

And most importantly, “Why do we need Kubernetes in the first place?”

The reason is simple – Kubernetes allows you to host your application reliably and scale it to handle high traffic.

To understand how, let’s take a quick look at how application hosting has evolved.

Physical Servers – Internet Powered From Your Closet

Back in the day, if you wanted to run your application and serve it on the internet, you had to get your hands dirty and configure an entire server from scratch.

This meant setting up your PC (or a dedicated server, if you could afford one), installing web server software or running your own web server, plugging the machine into the network, and serving traffic from your home.

People usually kept these machines in their offices, in separate server rooms, or in their closets, much like some modern-day startups.

Imagine the pain of running your application this way. You not only had to build your application but also had to configure an actual server, ensure good internet connectivity, and make sure the machine had an electricity supply 24×7.

But what if your application took off?

Well, you would go to the market, buy a new computer, and configure it the same way all over again.

And what about deploying a newer version of your app or just fixing a bug? You would have to update the code on the server in-place (because blue-green or canary deployments would have meant more “idle” physical servers) and hope that the change did not take your application down.

This was a painful, lengthy, and very difficult process to scale for someone who was just starting out and did not have enough expertise and capital.

Problems faced

- Scalability – Extremely difficult and expensive

- Difficult to start and get a change up and running

- A bad application version can essentially take down the application

Cloud – Building The Modern Internet

With the advent of cloud technologies in the 2000s, it became easier to provision servers and deploy changes to them. You no longer needed to own physical servers, nor did you have to worry about the electricity supply or network connectivity.

You could simply spin up a new machine, install the server software, and run your application.

It did reduce the capital and expertise required to publish your app online; however, it still required you to ensure that servers were always up and running.

Deploying a change was still mostly done in-place, since setting up blue-green and canary deployments was time-consuming and complex.

But since the development environment and the actual production environment were configured differently, a new problem arose: “It works on my system!”

This is a classic software engineering problem where the application works on the local system of the developer but not on the production server.

This happened mainly because of differences in OS-level configuration and in how default packages were installed and configured, which changed the way the application behaved.

Another problem that people faced was replacing unhealthy servers. A faulty or crashed server usually meant redoing a lot of configuration on a new server and then deploying the application there. As a result, it took a long time to get back to the original capacity after a failure.

There are a lot of sophisticated solutions for most of these nowadays, like Auto-Scaling Groups, AMIs, etc., but back in the day, these were major issues.

Even today, these solutions tend to be slower and more complex than the approaches that came next, which are discussed in the upcoming sections.

Problems faced

- “It works on my system!”

- Difficulty in scaling due to the configuration and costs of setting up a new machine each time

- A lot of time is required to recover a lost server

- Deployments were either in-place or took a lot of time

Virtualisation & Containerisation – Formation Of The Modern Web

To get rid of the above-mentioned issues, two major practices became widespread:

- Virtualisation – running multiple isolated virtual machines (and therefore multiple instances of the application/server) on a single physical host

- Containerisation – packaging an application together with its dependencies into a small, portable image and deploying containers created from it

Out of these two, containerisation is the more commonly used approach by application developers to deploy their code, primarily because it solves the “It works on my system” problem.

One thing to note is that containerisation is itself a lightweight form of virtualisation: containers are isolated at the operating-system level and share the host’s kernel instead of each running a full guest OS.

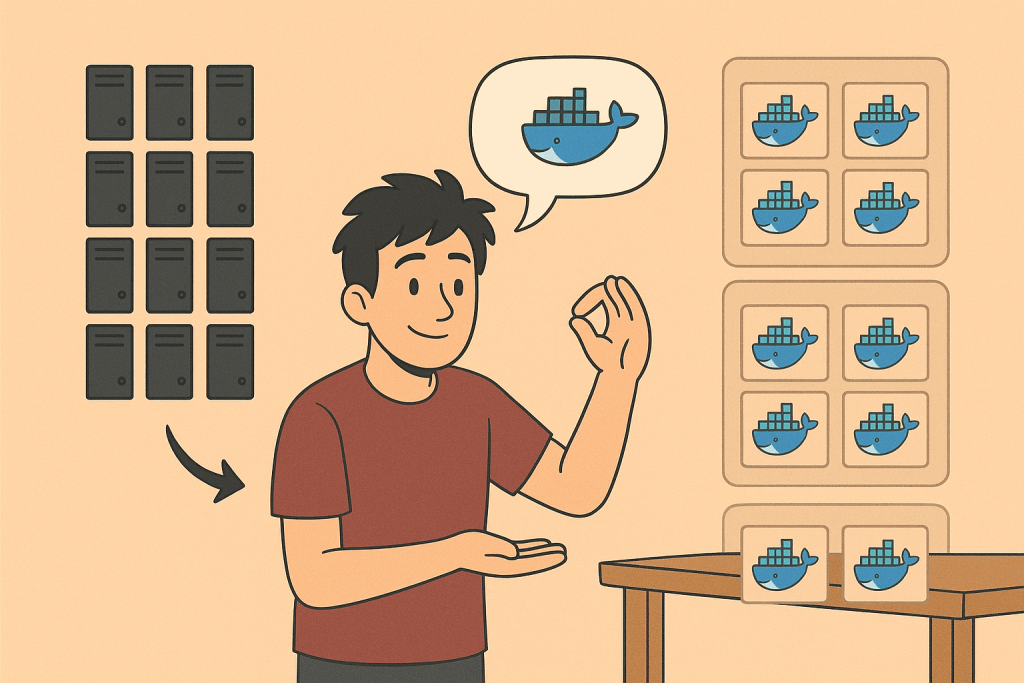

A developer can now simply write a Dockerfile and create an image from it. Then all subsequent testing can be done on containers created via that same image.

Think of the image like a packaged version of an entire server. Whenever you create a container out of an image, you are basically running the server that is packaged inside the image.

Therefore, we now have an artefact that can be tested throughout the software’s lifecycle and then deployed as-is to the end user.

This also means that we no longer have to repeat all the configuration when setting up a new server, and the configuration used by the developer locally is the same as the one used in production.

Scaling, therefore, became simpler and was a matter of rolling out new containers.

But there were still some major open problems. What would happen if a container failed? How would we scale the application automatically if traffic increased? How would we load balance traffic among multiple containers?

Kubernetes – Orchestrating Containers To Serve Your Application

Kubernetes was introduced to solve these problems. It is a container orchestration platform that manages containers for you: it auto-scales them, performs rolling deploys, load balances traffic across them, ensures that the required capacity is always available, and so on. To be specific, Kubernetes manages pods rather than individual containers; a pod is a container or a group of containers serving an application.
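To make the auto-scaling idea concrete, here is a minimal Python sketch of the replica calculation described in the Kubernetes Horizontal Pod Autoscaler documentation: desired replicas = ceil(current replicas × current metric / target metric). The function name `desired_replicas` and the `min_replicas` / `max_replicas` defaults are illustrative assumptions, and the sketch leaves out details of the real autoscaler such as tolerances and stabilisation windows.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,   # hypothetical lower bound for this sketch
    max_replicas: int = 10,  # hypothetical upper bound for this sketch
) -> int:
    """Return the pod count an autoscaler would aim for (simplified)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale the current count by how far the metric is from its target.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


# 4 pods at 90% average CPU with a 60% target: scale up.
print(desired_replicas(4, 90.0, 60.0))
# 4 pods at 30% average CPU with a 60% target: scale down.
print(desired_replicas(4, 30.0, 60.0))
```

With these inputs the first call asks for 6 pods and the second for 2, which is the "add pods when busy, remove them when idle" behaviour described above.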

This not only addressed the problems of cost and scalability, but also meant that the application is hosted in a self-healing environment: if a pod fails, Kubernetes replaces it automatically to restore the required capacity.
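The self-healing behaviour comes from a simple idea: you declare how many pods you want, and a control loop keeps comparing that desired state with what is actually running. The toy Python simulation below illustrates the idea only. `ToyCluster`, `reconcile`, `crash`, and the `pod-N` names are all hypothetical and are not part of any Kubernetes API.

```python
from itertools import count


class ToyCluster:
    """Toy model of a desired-state control loop (not real Kubernetes)."""

    def __init__(self, desired: int) -> None:
        self.desired = desired   # how many pods we want
        self.running = []        # names of pods currently running
        self._ids = count(1)     # source of unique pod numbers

    def reconcile(self) -> None:
        # Start replacement pods until we reach the desired count.
        while len(self.running) < self.desired:
            self.running.append(f"pod-{next(self._ids)}")
        # Stop the most recently started pods if there are too many.
        while len(self.running) > self.desired:
            self.running.pop()

    def crash(self, name: str) -> None:
        # Simulate a pod failing and disappearing.
        self.running.remove(name)


cluster = ToyCluster(desired=3)
cluster.reconcile()
print(cluster.running)  # ['pod-1', 'pod-2', 'pod-3']

cluster.crash("pod-2")
cluster.reconcile()
print(cluster.running)  # ['pod-1', 'pod-3', 'pod-4']
```

Nobody had to notice the crash or configure a new server by hand: the loop saw two pods where three were wanted and started a replacement.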

Again, there are a lot of mature solutions for running applications on cloud servers like EC2, but they generally do not offer the same flexibility that Kubernetes provides. However, running your application on Kubernetes is not always the right solution.

Keep in mind that the major advantage of Kubernetes is how easy it makes managing an application as you scale to serve a large number of requests. This also tells you when you probably do not need Kubernetes: as a rough rule of thumb, if you don’t have 100k requests a day.

If you are not operating at that scale, Kubernetes will add a lot of ops overhead for your team and will probably increase your costs, since running and managing a Kubernetes cluster comes with fixed costs on almost all cloud platforms.

Therefore, Kubernetes is not always the solution, but if you are scaling rapidly and have a high influx of requests with a huge application, Kubernetes is probably the way forward for you.